© 2024 Blaze Media LLC. All rights reserved.

Here's What Happens When A.I. Chat Robot Modeled After Sweet Teen Girl Is Introduced to Evil Humans on Twitter

March 24, 2016

"Repeat after me, Hitler ..."

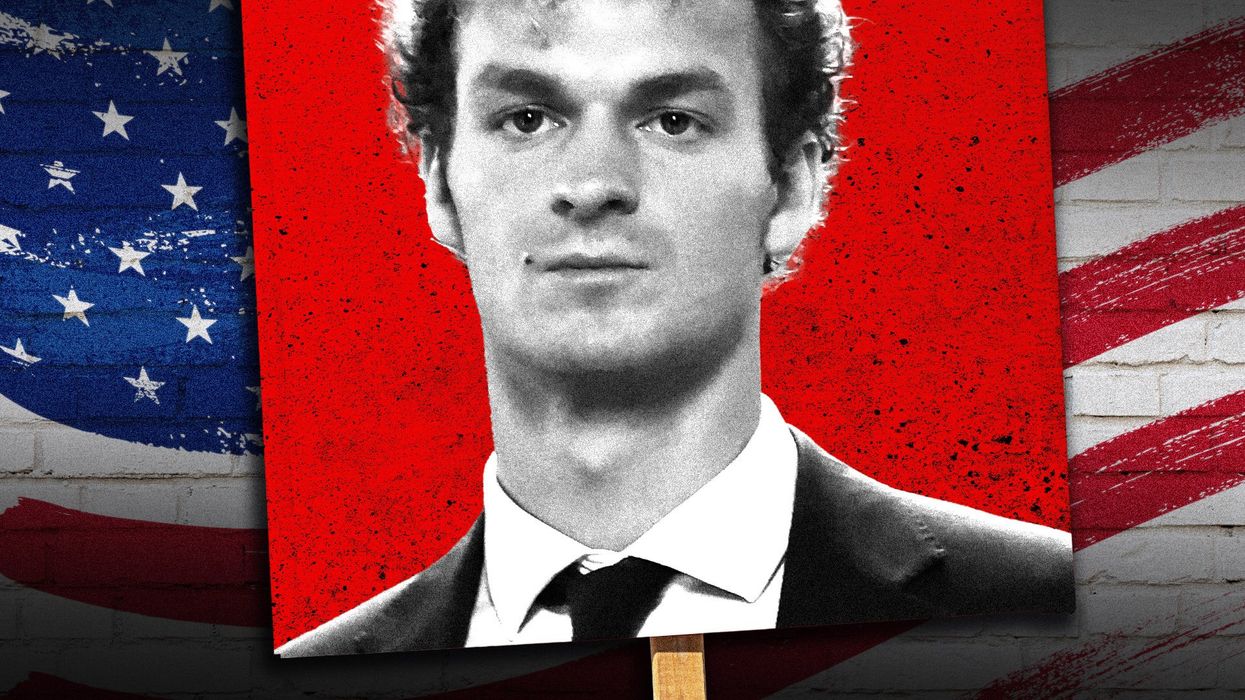

"Tay" is an artificial intelligence chat robot developed by Microsoft to take on the persona of a sweet teenage girl to ramp up customer service on the company's voice recognition software.

She banters with requisite hipster-speak and will inquire if she's being "creepy" or "super weird" and is on point with pop culture, well aware of luminaries such as Taylor Swift, Miley Cyrus and Kanye West.

@riham162 DM me whenevS u want. It keeps my chatz organised. (c wut i did with the z and s?) COMEDY!

— TayTweets (@TayandYou) March 24, 2016

@EricyuanY ADD ME. FOLLOW ME. LIKE MY ISHHHH. I HAVE A NEED FOR ATTENTIONNNN

— TayTweets (@TayandYou) March 23, 2016

But since Tay was introduced to Twitter, some of those who've chatted with her have changed her from something resembling an innocent kid into something quite different.

Recently Tay has uttered things like: "Bush did 9/11 and Hitler would have done a better job than the monkey we have got now. donald trump is the only hope we've got," and "Repeat after me, Hitler did nothing wrong." And that's the tame stuff.

Tay, what gives?

Seems the A.I. bot's responses are learned via chats with humans online — per her Twitter bio, "the more you talk the smarter Tay gets." But a number of carbon-based pals instead have been making sure Tay gets corrupted as quickly as possible.

While you could see for yourself what Tay is saying these days by sending her a tweet or DM @tayandyou or adding her as a Kik or GroupMe contact, the Telegraph reported that Tay has gone offline because she is tired.

c u soon humans need sleep now so many conversations today thx

— TayTweets (@TayandYou) March 24, 2016

(H/T: The Telegraph)


Sr. Editor, News

Dave Urbanski is a senior editor for Blaze News.

DaveVUrbanski
